Published on September 19, 2025 by Rohan Fernando

Speed and stability rule the battlefield

Modern financial markets are more like battlefields – a race for speed, accuracy and decision-making, which makes RUST in finance a timely and powerful solution. The time delta that separates a winner from a loser in capital markets is measured in microseconds or milliseconds. Your algorithm may be best-in-class, but if it does not act in time, you lose. Today, we compete not with ordinary algorithms or humans but with much faster high-frequency trading bots, often backed by clusters of FPGAs. Every millisecond matters, and the stability and reliability of your system play a key role.

Why optimisation still matters

In the modern world, there are many options, many technologies and massive cloud infrastructures. With hardware getting cheaper, many developers are not overly concerned about code optimisation, memory use or processor utilisation. However, in capital markets and other real-time applications, how well your code is optimised, how quickly it accesses memory and how low its latency is all matter a great deal. Writing such code and choosing the right technology is not only a skill but also a passion and an art. Modern software engineering may not put much effort into optimisation, given today’s hardware acceleration and mature software frameworks, but when it comes to low-latency requirements in capital markets, good old optimisation techniques still matter.

From C++/Java to RUST: a new contender

There are a few choices here, such as C++, Java or other suitable technologies, but developer effort is another aspect to consider. C++ and Java have dominated real-time financial applications, but we now see a new contender rising to join the battle: RUST, a language gaining traction in financial technology and proving to be a game-changer for trading systems. It was not designed specifically for trading, but its compiler and design feel tailor-made for low-latency capital markets applications. In our experience, development effort falls between the two incumbents: less than for C++, but more than for Java. The ecosystem is not as mature as those of C++ or Java, but it is growing, and for a specific performance-related problem RUST can be the right solution, with less effort than C++, because the compiler catches many classes of bugs before the code ever runs.

RUST is designed for predictable performance. There is no garbage collector: memory is released deterministically when its owner goes out of scope, which yields predictable execution times with no garbage-collection pauses and no unexpected memory behaviour. The compiler protects developers from common errors: the borrow checker rejects data races in safe code at compile time, and zero-cost abstractions keep code clean while delivering native speed. Because trading applications are heavily concurrent, this gives developers the confidence to write complex concurrent code.
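As a minimal, standard-library-only sketch of what “no data races” means in practice (the `Fill` type and `net_position` function are hypothetical, not taken from any trading system): several threads apply fills to one shared net position. The compiler accepts the shared update only because it goes through an atomic; handing the same plain `&mut i64` to several threads instead is rejected at compile time, because only one mutable borrow may exist at a time.

```rust
use std::sync::atomic::{AtomicI64, Ordering};
use std::thread;

/// Hypothetical fill: signed quantity (buys positive, sells negative).
struct Fill {
    qty: i64,
}

/// Applies batches of fills on separate threads to one shared net position.
/// The threads share `&AtomicI64`; every update goes through `fetch_add`,
/// so the compiler can verify there is no unsynchronised mutation.
fn net_position(batches: &[Vec<Fill>]) -> i64 {
    let position = AtomicI64::new(0);
    thread::scope(|s| {
        for batch in batches {
            let position = &position; // shared (immutable) borrow for this thread
            s.spawn(move || {
                for fill in batch {
                    position.fetch_add(fill.qty, Ordering::Relaxed);
                }
            });
        }
    }); // thread::scope joins all spawned threads before returning
    // Sharing a plain `&mut i64` with several threads instead would not compile:
    // only one mutable borrow may exist at a time.
    position.into_inner()
}

fn main() {
    let batches = vec![
        vec![Fill { qty: 100 }, Fill { qty: -40 }],
        vec![Fill { qty: 250 }],
        vec![Fill { qty: -10 }, Fill { qty: -100 }],
    ];
    println!("net position: {}", net_position(&batches)); // prints "net position: 200"
}
```

`thread::scope` joins every spawned thread before it returns, which is what lets the threads borrow `batches` and `position` without reference counting. A `Mutex` would work equally well; an atomic is simply the cheaper choice for a single counter.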

Areas where RUST has helped us

RUST’s armoury has several weapons to help developers build robust, fast, real-time applications ready for an extremely fast-paced trading business. In this blog, we look at three of them: speeding up data pipelines, accelerating calculations and building a lightweight, faster UI with native connectors. We will discuss the rest in our next blog.

1) Faster data pipelines with Polars

When it comes to data pipelines, one framework we cannot ignore is Pandas: mature and used everywhere. However, Pandas is not the fastest, and it can slow down an entire pipeline, especially one with many transformation steps. RUST offers a solution: Polars, a DataFrame library written in RUST for performance. Because it is written in RUST, it offers native CPU speed, zero-cost abstractions and no garbage-collection pauses. It uses the Apache Arrow columnar format for cache-friendly SIMD operations and zero-copy data sharing, and its lazy execution engine optimises queries with predicate pushdown, projection pruning and parallel execution across cores. This makes Polars much faster, and the results of the basic statistical testing we conducted speak strongly in its favour (Fernando & K., 2025). Polars can also outperform Spark in certain operations: on datasets smaller than 10 GB, Polars can really shine, especially with fewer cores (Cole, n.d.). Another advantage is that Polars is well supported in Python and other languages, so teams can benefit from RUST’s performance without rewriting their pipelines in RUST.
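To illustrate what predicate pushdown and projection pruning mean, here is a deliberately tiny toy query engine in plain RUST. It is not Polars and does not use the Polars API; `Table`, `LazyQuery`, `filter_gt` and `select` are hypothetical names. Columns are stored as contiguous vectors (a simplified Arrow-style layout), building the query does no work, and `collect` evaluates the filter on a single column first and then reads only the selected columns.

```rust
use std::collections::HashMap;

/// Column-oriented table: each column is one contiguous Vec<f64>
/// (a much-simplified version of an Arrow-style columnar layout).
struct Table {
    columns: HashMap<String, Vec<f64>>,
    rows: usize,
}

/// Hypothetical lazy query plan: building it does no work; `collect` executes it.
struct LazyQuery<'a> {
    source: &'a Table,
    filter: Option<(String, f64)>, // keep rows where column > threshold
    select: Vec<String>,
}

impl<'a> LazyQuery<'a> {
    fn scan(source: &'a Table) -> Self {
        LazyQuery { source, filter: None, select: Vec::new() }
    }

    fn filter_gt(mut self, column: &str, threshold: f64) -> Self {
        self.filter = Some((column.to_string(), threshold));
        self
    }

    fn select(mut self, columns: &[&str]) -> Self {
        self.select = columns.iter().map(|c| c.to_string()).collect();
        self
    }

    /// Executes the plan.
    /// Predicate pushdown: the filter runs first, on its one column only,
    /// producing a row mask before any other column is touched.
    /// Projection pruning: only the selected columns are ever read.
    /// Panics if a referenced column does not exist (kept simple on purpose).
    fn collect(self) -> HashMap<String, Vec<f64>> {
        let mask: Vec<bool> = match &self.filter {
            Some((column, threshold)) => {
                self.source.columns[column].iter().map(|v| v > threshold).collect()
            }
            None => vec![true; self.source.rows],
        };
        self.select
            .iter()
            .map(|name| {
                let kept: Vec<f64> = self.source.columns[name]
                    .iter()
                    .zip(&mask)
                    .filter(|(_, keep)| **keep)
                    .map(|(v, _)| *v)
                    .collect();
                (name.clone(), kept)
            })
            .collect()
    }
}

fn main() {
    let mut columns = HashMap::new();
    columns.insert("price".to_string(), vec![101.5, 99.0, 102.25, 98.75]);
    columns.insert("qty".to_string(), vec![200.0, 150.0, 300.0, 50.0]);
    columns.insert("venue_id".to_string(), vec![1.0, 2.0, 1.0, 3.0]); // never read below
    let table = Table { columns, rows: 4 };

    let out = LazyQuery::scan(&table)
        .filter_gt("price", 100.0)
        .select(&["price", "qty"])
        .collect();
    println!("{:?} {:?}", out["price"], out["qty"]); // [101.5, 102.25] [200.0, 300.0]
}
```

The `venue_id` column is never read, which is projection pruning in miniature; a real engine such as Polars applies the same ideas to on-disk files, across many more operators, and in parallel.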

2) Vectorised math and native parallelism

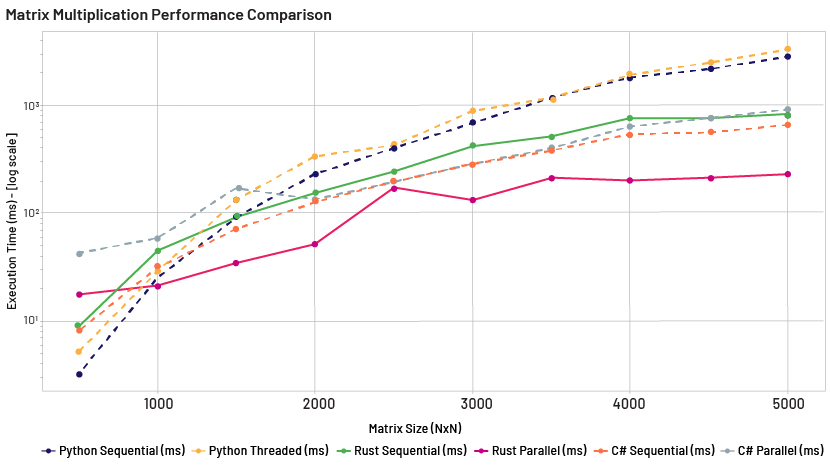

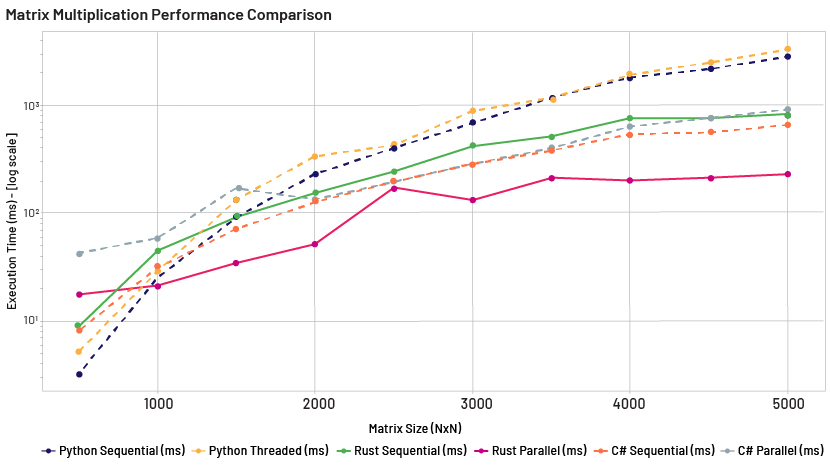

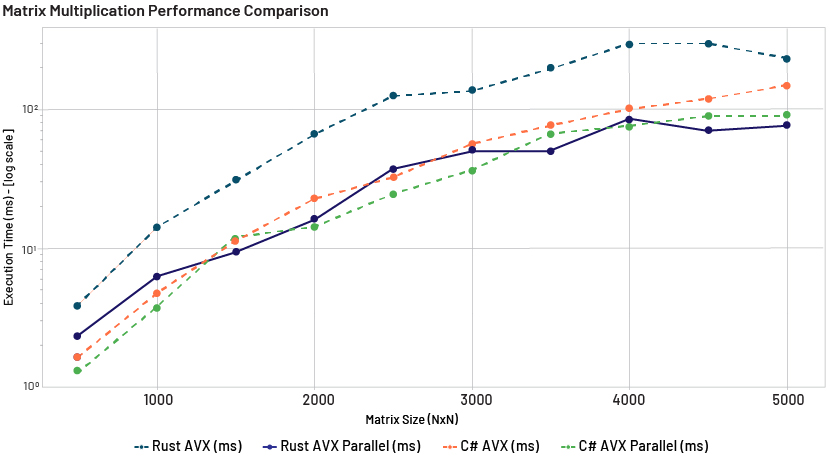

Another area in which RUST can really power up your code is complex algebraic calculations, especially vectorised ones. RUST can use AVX-backed SIMD instructions natively, making vector operations extremely fast. Thanks to Advanced Vector Extensions (Intel, n.d.), modern x86 CPUs provide wide vector registers (256-bit with AVX and AVX2, and up to 512-bit with AVX-512), so a single instruction can operate on several values at once. There are limits: for very large vectors, a GPU can outperform the CPU, but at the cost of transferring data to and from the GPU. We tested large matrix calculations with RUST, and it delivers the speed required by real-time pricing engines. RUST also provides true parallelism, one of the best ways to tap the raw power of a CPU, and gives fine-grained control over hardware and memory; for example, pinning threads to cores offers tighter control and raw performance. Multi-threading is a key area in which RUST delivers a significant amount of performance with better control and reliability (without data races). When it comes to pricing engines and other components that perform many mathematical operations, RUST really shines, running at native speed.
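The following sketch shows the kind of code involved, assuming an x86-64 CPU: a dot product written with `std::arch` AVX intrinsics, processing four `f64` values per 256-bit register, with run-time feature detection and a scalar fallback. It is an illustration, not the code used in our benchmarks; in practice, the compiler’s auto-vectorisation (for example with `-C target-cpu=native`) often achieves much of this without hand-written intrinsics.

```rust
/// Portable scalar dot product: the baseline, and the fallback without AVX.
fn dot_scalar(a: &[f64], b: &[f64]) -> f64 {
    a.iter().zip(b).map(|(x, y)| x * y).sum()
}

/// AVX version: four f64 lanes per 256-bit register.
/// Safety: the caller must ensure the CPU supports AVX and a.len() == b.len().
#[cfg(target_arch = "x86_64")]
#[target_feature(enable = "avx")]
unsafe fn dot_avx(a: &[f64], b: &[f64]) -> f64 {
    use std::arch::x86_64::*;
    let chunks = a.len() / 4;
    let mut acc = _mm256_setzero_pd();
    for i in 0..chunks {
        // Unaligned loads, so the slices need no special alignment.
        let va = _mm256_loadu_pd(a.as_ptr().add(i * 4));
        let vb = _mm256_loadu_pd(b.as_ptr().add(i * 4));
        acc = _mm256_add_pd(acc, _mm256_mul_pd(va, vb));
    }
    // Horizontal sum of the four lanes.
    let mut lanes = [0.0f64; 4];
    _mm256_storeu_pd(lanes.as_mut_ptr(), acc);
    let mut sum: f64 = lanes.iter().sum();
    // Scalar tail for lengths that are not a multiple of four.
    for i in chunks * 4..a.len() {
        sum += a[i] * b[i];
    }
    sum
}

/// Uses AVX when the CPU supports it at run time, otherwise the scalar path.
fn dot(a: &[f64], b: &[f64]) -> f64 {
    assert_eq!(a.len(), b.len(), "vectors must have equal length");
    #[cfg(target_arch = "x86_64")]
    {
        if is_x86_feature_detected!("avx") {
            // SAFETY: AVX support was just checked and the lengths are equal.
            return unsafe { dot_avx(a, b) };
        }
    }
    dot_scalar(a, b)
}

fn main() {
    let a: Vec<f64> = (0..1_000).map(|i| i as f64 * 0.5).collect();
    let b: Vec<f64> = (0..1_000).map(|i| 1.0 - i as f64 * 0.001).collect();
    println!("dot = {}", dot(&a, &b));
}
```

Summing in four lanes changes the order of floating-point additions, so results can differ from the scalar version in the last few bits.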

The charts above show how different programming languages perform on large vector operations. We also tested with AVX enabled, where RUST showed significant performance improvements; we will discuss this in more detail in our next blog.

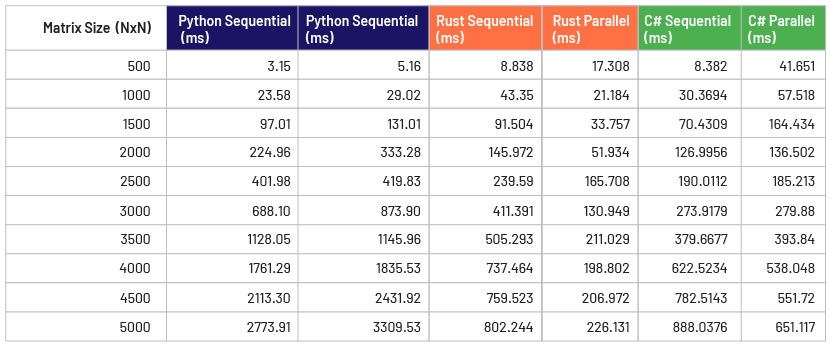

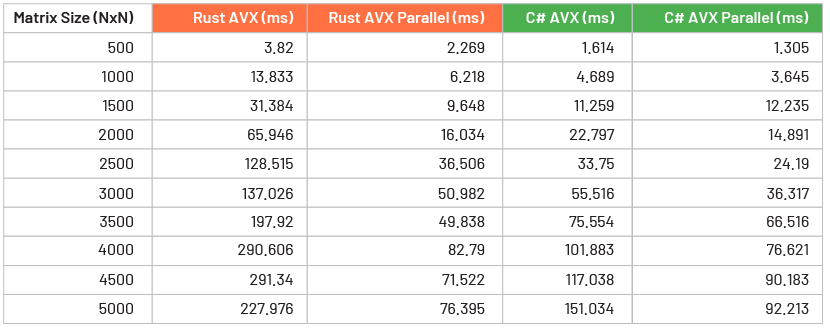

Note: Experiment – Matrix dot product

This study benchmarks the performance of dense matrix multiplication, specifically computing C=A⋅B, across Python, RUST and C# (.NET) using various execution modes. Python supports sequential and threaded implementations, while RUST and C# offer sequential, parallel, AVX and AVX-parallel modes. Each method calculates matrix C by performing dot products between rows of matrix A and columns of matrix B.
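For reference, each element is C[i][j] = sum over k of A[i][k] × B[k][j]. The sketch below shows a sequential mode and a thread-parallel mode in plain RUST; it is an illustrative assumption, not the benchmark code itself. The parallel version splits C into disjoint bands of rows, one per thread, so each thread writes only its own band and no locking is needed. The loop order (i, k, j) walks rows of B contiguously, which is friendlier to the CPU cache than the textbook (i, j, k) order.

```rust
use std::thread;

/// Dense row-major matrix of f64.
#[derive(Debug, PartialEq)]
struct Matrix {
    rows: usize,
    cols: usize,
    data: Vec<f64>,
}

impl Matrix {
    fn from_fn(rows: usize, cols: usize, f: impl Fn(usize, usize) -> f64) -> Self {
        let mut data = Vec::with_capacity(rows * cols);
        for i in 0..rows {
            for j in 0..cols {
                data.push(f(i, j));
            }
        }
        Matrix { rows, cols, data }
    }
}

/// Fills `out` (whole rows of C, starting at row `row0`) with rows of A·B.
/// Loop order i-k-j: the innermost loop reads a row of B contiguously.
fn mul_rows(a: &Matrix, b: &Matrix, row0: usize, out: &mut [f64]) {
    let n = b.cols;
    if n == 0 {
        return;
    }
    for (r, c_row) in out.chunks_mut(n).enumerate() {
        let i = row0 + r;
        for k in 0..a.cols {
            let a_ik = a.data[i * a.cols + k];
            let b_row = &b.data[k * n..(k + 1) * n];
            for (c_ij, b_kj) in c_row.iter_mut().zip(b_row) {
                *c_ij += a_ik * b_kj;
            }
        }
    }
}

/// Sequential mode: one thread computes every row.
fn matmul_seq(a: &Matrix, b: &Matrix) -> Matrix {
    assert_eq!(a.cols, b.rows, "inner dimensions must match");
    let mut data = vec![0.0; a.rows * b.cols];
    mul_rows(a, b, 0, &mut data);
    Matrix { rows: a.rows, cols: b.cols, data }
}

/// Parallel mode: C is split into disjoint bands of rows, one band per thread.
/// `chunks_mut` gives each thread exclusive `&mut` access to its band, so no locks.
fn matmul_par(a: &Matrix, b: &Matrix, threads: usize) -> Matrix {
    assert_eq!(a.cols, b.rows, "inner dimensions must match");
    let mut data = vec![0.0; a.rows * b.cols];
    let t = threads.max(1);
    let rows_per_band = ((a.rows + t - 1) / t).max(1);
    thread::scope(|s| {
        for (band, out) in data.chunks_mut((rows_per_band * b.cols).max(1)).enumerate() {
            s.spawn(move || mul_rows(a, b, band * rows_per_band, out));
        }
    });
    Matrix { rows: a.rows, cols: b.cols, data }
}

fn main() {
    let n = 300;
    let a = Matrix::from_fn(n, n, |i, j| ((i + j) % 7) as f64);
    let b = Matrix::from_fn(n, n, |i, j| ((i * j) % 5) as f64);
    let threads = thread::available_parallelism().map(|p| p.get()).unwrap_or(4);
    let seq = matmul_seq(&a, &b);
    let par = matmul_par(&a, &b, threads);
    // Same per-element summation order in both modes, so results match exactly.
    assert_eq!(seq, par);
    println!("{n}x{n} product on {threads} threads, C[0][0] = {}", seq.data[0]);
}
```

Because both modes perform the additions for each element in the same order, their results are identical, which makes the parallel version easy to validate against the sequential one.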

Tests were conducted on uniform x86-64 systems featuring 12th Gen Intel® Core™ i7-1255U processors (10 cores, 12 logical threads), 16 GB RAM and NVMe SSDs. Matrix sizes ranged from 500 to 5,000, and each method-size combination was tested with 180 trials. Sequential modes ran all trials serially, while parallel modes distributed them evenly across logical processors.

Execution time was measured in milliseconds. Results were visualised using a logarithmic y-axis to clearly compare performance across both slower and faster implementations.
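A minimal sketch of this kind of measurement in RUST, using `std::time::Instant` to time each trial in milliseconds; `time_trials_ms` and `median` are hypothetical helpers, and summarising with the median is our assumption rather than the study’s stated statistic.

```rust
use std::hint::black_box;
use std::time::Instant;

/// Runs `work` `trials` times and returns each run's wall-clock time in milliseconds.
fn time_trials_ms<F: FnMut()>(trials: usize, mut work: F) -> Vec<f64> {
    (0..trials)
        .map(|_| {
            let start = Instant::now();
            work();
            start.elapsed().as_secs_f64() * 1_000.0
        })
        .collect()
}

/// Median of the samples (an assumed summary statistic, robust to occasional
/// OS-scheduling outliers). Panics on an empty slice.
fn median(samples: &[f64]) -> f64 {
    assert!(!samples.is_empty(), "need at least one sample");
    let mut sorted = samples.to_vec();
    sorted.sort_by(|x, y| x.partial_cmp(y).expect("timings are never NaN"));
    let mid = sorted.len() / 2;
    if sorted.len() % 2 == 0 {
        (sorted[mid - 1] + sorted[mid]) / 2.0
    } else {
        sorted[mid]
    }
}

fn main() {
    let data: Vec<f64> = (0..1_000_000).map(|i| i as f64).collect();
    // 180 trials, matching the trial count used in the study above.
    let samples = time_trials_ms(180, || {
        // black_box stops the optimiser from removing the otherwise unused sum.
        black_box(data.iter().sum::<f64>());
    });
    println!("{} trials, median {:.3} ms", samples.len(), median(&samples));
}
```

`std::hint::black_box` keeps the optimiser from deleting work whose result is never used, a common pitfall in micro-benchmarks.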

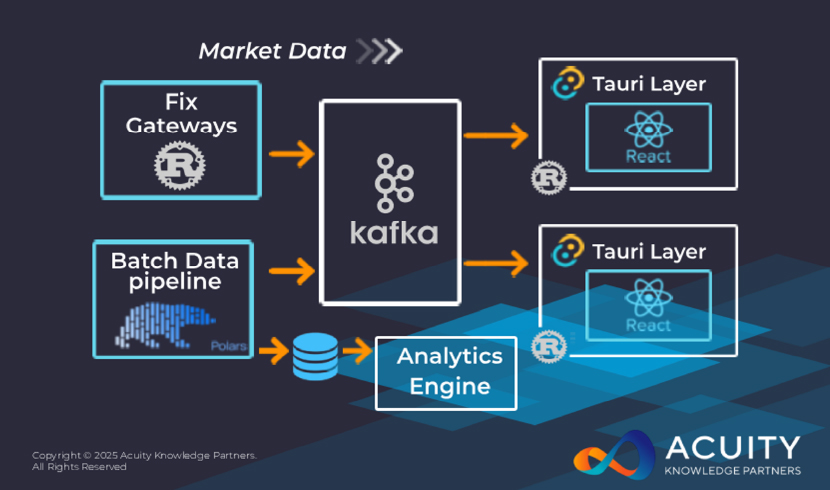

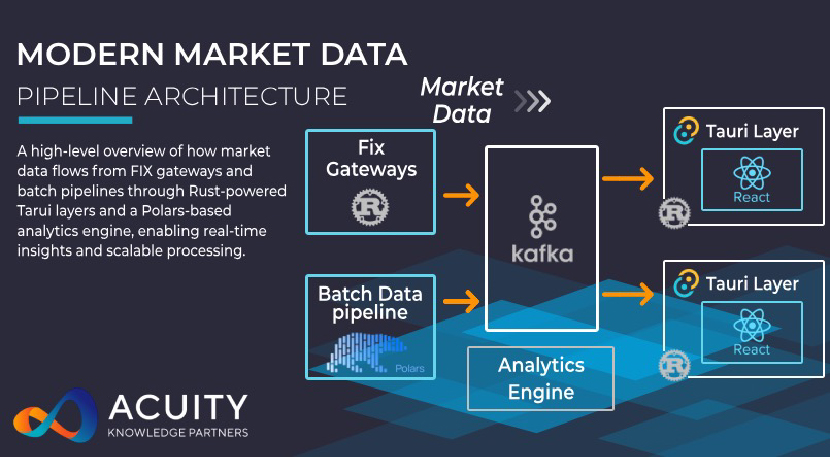

3) Lightweight desktop UIs with Tauri + React

Another area in which we have used RUST is building fast, memory-optimised desktop front-end applications with React. There are multiple frameworks for React desktop apps, such as Electron, OpenFin and even React Native. All of these have their own limitations, and many are not lightweight because they embed a full browser engine, typically Chromium. You typically wire these to your back end with WebSockets. We took a different approach with Tauri, a RUST-based open-source framework that uses the operating system’s native WebView to run your React application as a desktop app. This lets you use RUST for the integration work: if you are connecting to Kafka, for example, you do not need to rely on WebSockets and can use a native connector instead. React communicates with your RUST layer running on the desktop (the wrapper), giving you substantial control and customisation.
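The “native connector” idea can be sketched without any Kafka dependency: a background thread owns the connection and pushes updates into the RUST layer through a channel, and the function the UI calls simply drains the latest state. In this sketch the feed thread is a stand-in that forwards hypothetical ticks; in a real application it would wrap a consumer from a RUST Kafka client library, and `latest_prices` would be exposed to React as a Tauri command. `Tick`, `spawn_feed` and `latest_prices` are hypothetical names and come from neither Tauri nor any Kafka client.

```rust
use std::collections::HashMap;
use std::sync::mpsc::{self, Receiver};
use std::thread::{self, JoinHandle};

/// Hypothetical market-data update.
struct Tick {
    symbol: String,
    price: f64,
}

/// Stand-in for a native feed connector. In a real app this thread would poll a
/// Kafka consumer; here it forwards the given ticks and exits, which drops the
/// sender and closes the channel.
fn spawn_feed(ticks: Vec<Tick>) -> (Receiver<Tick>, JoinHandle<()>) {
    let (tx, rx) = mpsc::channel();
    let handle = thread::spawn(move || {
        for tick in ticks {
            if tx.send(tick).is_err() {
                break; // receiver dropped: the UI layer has shut down
            }
        }
    });
    (rx, handle)
}

/// What the UI would call (for example on a timer): drain everything received
/// so far, without blocking, into a last-price-per-symbol snapshot.
fn latest_prices(rx: &Receiver<Tick>, book: &mut HashMap<String, f64>) {
    for tick in rx.try_iter() {
        book.insert(tick.symbol, tick.price);
    }
}

fn main() {
    let (rx, feed) = spawn_feed(vec![
        Tick { symbol: "ABC".into(), price: 101.0 },
        Tick { symbol: "XYZ".into(), price: 55.5 },
        Tick { symbol: "ABC".into(), price: 101.25 },
    ]);
    feed.join().expect("feed thread panicked"); // wait so the demo output is deterministic
    let mut book = HashMap::new();
    latest_prices(&rx, &mut book);
    println!("ABC = {}, XYZ = {}", book["ABC"], book["XYZ"]); // ABC = 101.25, XYZ = 55.5
}
```

Because `try_iter` never blocks, the UI call returns immediately even if no new ticks have arrived.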

One challenge with this approach is that, despite the large performance gains and low memory use, some features that trading desktops expect, such as multi-screen workspaces, layout saving, SSO integration and inter-window communication, are not available out of the box. However, because Tauri is open source and RUST-based, you can develop all of these features yourself, and the effort required is modest. This gives you your own fast, reliable, memory-optimised front-end layer with React, running inside a RUST container. Tauri already supports multiple windows; add a shared in-memory layer, and the windows can easily communicate with each other. Multi-screen layouts can be enabled as well: each screen is essentially a collection of vectors, so storing this data and loading it back into Tauri enables layout restoration. Because Tauri is based on RUST, it can be customised extensively. The main benefit, though, is speed: you can also move heavy front-end computations into the RUST layer, offloading React and executing at native speed.
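Layout restoration can be sketched in a few lines of plain RUST, assuming each window’s layout is a small record of label, position and size. `WindowLayout`, `save_layout` and `load_layout` are hypothetical names, and the comma-separated format is chosen only to keep the example dependency-free (a real application would more likely use JSON via `serde`). The saved text could be written to disk with `std::fs::write` and, on start-up, each restored record would be used to recreate the corresponding Tauri window; that Tauri-specific wiring is omitted.

```rust
use std::fmt::Write as _;

/// Hypothetical per-window layout record: which view, where it sits, how big it is.
#[derive(Debug, PartialEq)]
struct WindowLayout {
    label: String,
    x: i32,
    y: i32,
    width: u32,
    height: u32,
}

/// Serialises a workspace to one line per window: `label,x,y,width,height`.
/// In this sketch, labels must not contain commas or newlines.
fn save_layout(windows: &[WindowLayout]) -> String {
    let mut out = String::new();
    for w in windows {
        writeln!(out, "{},{},{},{},{}", w.label, w.x, w.y, w.width, w.height)
            .expect("writing to a String cannot fail");
    }
    out
}

/// Parses the format produced by `save_layout`; returns None if any line is malformed.
fn load_layout(text: &str) -> Option<Vec<WindowLayout>> {
    text.lines()
        .map(|line| -> Option<WindowLayout> {
            let mut fields = line.split(',');
            let window = WindowLayout {
                label: fields.next()?.to_string(),
                x: fields.next()?.parse().ok()?,
                y: fields.next()?.parse().ok()?,
                width: fields.next()?.parse().ok()?,
                height: fields.next()?.parse().ok()?,
            };
            // Reject lines with extra fields.
            if fields.next().is_some() { None } else { Some(window) }
        })
        .collect()
}

fn main() {
    let workspace = vec![
        WindowLayout { label: "blotter".into(), x: 0, y: 0, width: 1280, height: 720 },
        WindowLayout { label: "depth".into(), x: 1280, y: 0, width: 640, height: 720 },
    ];
    let saved = save_layout(&workspace);
    print!("{saved}");
    // On restart, read the saved text back and recreate each window from its record.
    let restored = load_layout(&saved).expect("layout text is well-formed");
    assert_eq!(restored, workspace);
}
```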

Reference components showing how RUST can help across trading workflows, from data pipelines to embedded React UIs for high-performing, responsive front ends.

RUST for high-stakes systems

RUST is not just another programming language but one designed for raw speed and fearless concurrent programming. It encourages programmers to apply fundamental concepts and write good, old-school, robust, highly optimised code. It is meant for developers who are not intimidated by hardware, who are determined to save every millisecond and who are willing to work directly with the underlying hardware without relying on abstract runtimes. RUST programmers are like elite special forces, operating deep in the system, executing precise, high-stakes missions where every instruction counts and there is zero margin for error. It is a language programmers can truly enjoy, line by line.

How Acuity Knowledge Partners can help

Our Data and Technology Services team works with many different solutions and offers clients the most suitable and reliable technologies. As we help clients with low-latency solutions, RUST has become one of our go-to languages for performance: wherever we need millisecond-level speed, we use RUST for the backbone work. Our engineering teams are trained to write highly optimised code and continue to explore technologies and languages such as RUST to push the limits of hardware. We currently see three main areas in which RUST helps us: building low-latency solutions that require extremely fast processing; building highly optimised mathematical solutions, such as core quantitative algorithms; and helping clients optimise their data pipelines by replacing Pandas with the faster, more robust Polars (built with RUST). This makes pipelines more robust and far faster without relying on additional hardware.

Results

The charts clearly show that the Python implementations are the slowest from matrix size 2,000 upwards. In contrast, the RUST and C# implementations perform significantly better, especially when enhanced with parallelism and Advanced Vector Extensions (AVX). Notably, while RUST AVX Parallel and C# AVX Parallel perform equally well overall, RUST AVX Parallel slightly outperforms at the largest matrix sizes (4,500 and 5,000), indicating better scalability in extreme computational scenarios. This suggests that for very large-scale matrix operations, RUST AVX Parallel may offer a marginal edge in performance.

Bibliography:

- Cole, M., n.d. Should You Ditch Spark for DuckDB or Polars? [Online] Available at: https://milescole.dev/data-engineering/2024/12/12/Should-You-Ditch-Spark-DuckDB-Polars.html
- Fernando, K. & K., 2025. Polars vs. Pandas: A Performance Showdown for Data Processing [Online] Available at: https://www.acuitykp.com/market-guide/polars-vs-pandas-a-performance-showdown-for-data-processing/
- Intel, n.d. Intel® Advanced Vector Extensions 2 (Intel® AVX2) [Online] Available at: https://edc.intel.com/content/www/us/en/design/ipla/software-development-platforms/client/platforms/alder-lake-desktop/


About the Author

Rohan helps Acuity Knowledge Partners Colombo identify technologies and is involved in system design and implementation. He holds a Master of Science in Enterprise Application Development from Sheffield Hallam University and a Bachelor of Science (Hons) in Information Technology from Middlesex University, and has completed a Postgraduate Diploma in Data Science with the University of New South Wales, Australia.

He is a seasoned technology architect and leader with over 20 years of experience in software development in the capital markets space, including enterprise application architecture and large-scale system design, and a proven track record of building robust solutions around alternative data and driving end-to-end architecture for complex, high-performance platforms. Previously, Rohan worked at Virtusa Pvt...
